In 2023, Broadcom acquired VMware and immediately announced the end of perpetual licences, the termination of the free version of ESXi, and a switch to a subscription model. As a result, many companies around the world have seen their costs rise by 100-300%, prompting them to look for alternatives.

Davidson has chosen to launch a pilot with KVM, an open-source virtualisation solution, for our OKD (OpenShift) containerisation platform.

Choosing an open-source platform has many benefits, including no licensing fees, independence from proprietary vendors and freedom of use.

What is OKD?

In the containerisation world, OKD (OpenShift) is a container orchestration platform designed to accelerate application development, deployment, and scaling. It enables developers and operational teams to effectively manage applications’ lifecycles over the long term.

What’s the difference between OKD and OpenShift?

According to Red Hat documentation, OKD is the basis for OpenShift. Initially known as OpenShift Origin, OKD is now recognised as “a community project comprising software components that run above Kubernetes”. In other words, OKD is the community version of OpenShift.

OKD in Davidson’s IT department

The YODA team manages around fifty internal applications for Davidson. Most of these applications are hosted on the OKD platform. It is used to simplify the development, deployment, and management of these container-based applications.

POC objective

Currently, at YODA, we use OKD in conjunction with VMware vSphere, a virtualisation solution, to deploy, run and manage the lifecycle of our applications. VMware is a leader in virtualisation, offering the ESXi hypervisor and the vSphere platform. However, these solutions require paid licences. The objective of our Proof of Concept (POC) is to find and assess an optimised virtualisation alternative for OKD. This alternative must offer a lower total cost of ownership while being as powerful, high-performing, simple, stable, and secure as VMware.

Comparison between KVM and VMware for virtualisation

- Architecture

KVM works as a module of the Linux kernel, meaning it is tightly integrated with the host operating system. KVM virtual machines are managed as normal Linux processes, allowing compatibility with all standard Linux tools and easy management via system commands.

VMware ESXi, on the other hand, is a type 1 hypervisor that runs directly on the hardware, without an intermediary. This minimises the load on the host system and provides strong isolation between virtual machines, thereby reducing the risk of conflicts.

- Performance

KVM has excellent performance thanks to its proximity to the Linux kernel and efficient use of hardware virtualisation extensions. However, the need for a host operating system can introduce a slight overhead.

VMware, with ESXi, typically offers superior performance because it runs directly on the hardware. This architecture minimises latency and maximises efficiency, which is crucial for environments that require peak performance.

- Management and administration

KVM uses tools like libvirt, virt-manager, and other CLI interfaces to manage virtual machines. These tools, although powerful, can involve a learning curve and be less user-friendly than modern graphical interfaces.

VMware provides sophisticated, well-designed management solutions, such as vCenter, that make it easy to centrally manage large-scale virtualisation environments. These tools offer advanced functionality for automation, monitoring and orchestration.

- Scalability and resilience

KVM can scale efficiently to meet growing needs with features such as live VM migration. However, additional effort may be needed for advanced configuration for very large environments.

VMware is known for its ability to easily scale and manage complex environments. Features such as high availability (HA), fault tolerance and clustering are built-in, making it an ideal choice for critical scenarios where resilience is essential.

- Security

KVM benefits from Linux's inherent security, with features like SELinux and AppArmor adding further layers of protection. However, security depends largely on the configuration and management of the host environment.

VMware offers robust, integrated security solutions, such as VMware NSX for network security virtualisation. VMware environments are often certified to meet high security standards, which is an asset for organisations that require enhanced security.

- Cost

As KVM is open source, it is generally less expensive than commercial solutions. There is no licensing fee for using KVM, making it an attractive option for low-budget organisations. The associated costs are mainly related to support and management.

Although VMware is more expensive, it offers a range of features and services that often warrant the investment. Costs include licences for the ESXi hypervisor, vCenter for centralised management, and other add-on modules. For large companies, these costs can be offset by the benefits in terms of performance, management and resilience.

- Ecosystem and support

KVM, as an open source solution, has a vast ecosystem and a dynamic community. Several Linux distributions integrate it, and many resources and forums are available for support. However, commercial support usually has to be purchased from a third party.

VMware offers a mature ecosystem with a wide range of complementary products and partners. VMware’s commercial support is renowned for its quality with extensive support options available to enterprises. The VMware ecosystem is also well integrated with partner solutions for extended functionality.

So why choose KVM?

KVM (Kernel-based Virtual Machine) is an open source virtualisation technology that converts the Linux kernel into a powerful hypervisor. It effectively virtualises enterprise workloads and provides all the functionality needed to manage both physical and virtual infrastructures, all at a lower operating cost.

KVM's strong points:

- Cost:

- Cost-effective: as an open source technology, KVM does not incur any additional licensing costs. This significantly reduces the total cost of ownership (TCO) compared to proprietary virtualisation solutions.

- Open Source:

- Innovation and Flexibility: access to the KVM source code allows developers to innovate and tailor the solution to their specific needs. This fosters great flexibility in managing and developing virtual environments.

- Independence: by using KVM, companies avoid relying on a single vendor, giving them freedom in choosing and managing their infrastructure.

- Performance:

- High Performance: KVM delivers performance close to native hardware, without the overhead of an additional hypervisor layer. This means that applications can run nearly as fast as they would on bare metal.

- Interoperability: KVM provides excellent compatibility between Linux and Windows platforms, allowing seamless management of heterogeneous systems.

- Compatibility and integration:

- Linux kernel integration: thanks to its direct integration into the Linux kernel, KVM is widely adopted in Linux-based environments. It takes advantage of Linux’s strengths, including security, memory management, process scheduling, network and peripheral device drivers.

- Integration with OKD: its compatibility with Red Hat distributions and close integration with Kubernetes-based solutions, such as OKD, make it an attractive option for container deployments.

- Easy to use:

- Centralised management: KVM makes it easy and efficient to create, start, stop, pause, migrate and template hundreds of virtual machines. It offers centralised and simplified management of virtual environments across varied hardware and software.

Setting up an OKD cluster with KVM: the prerequisites

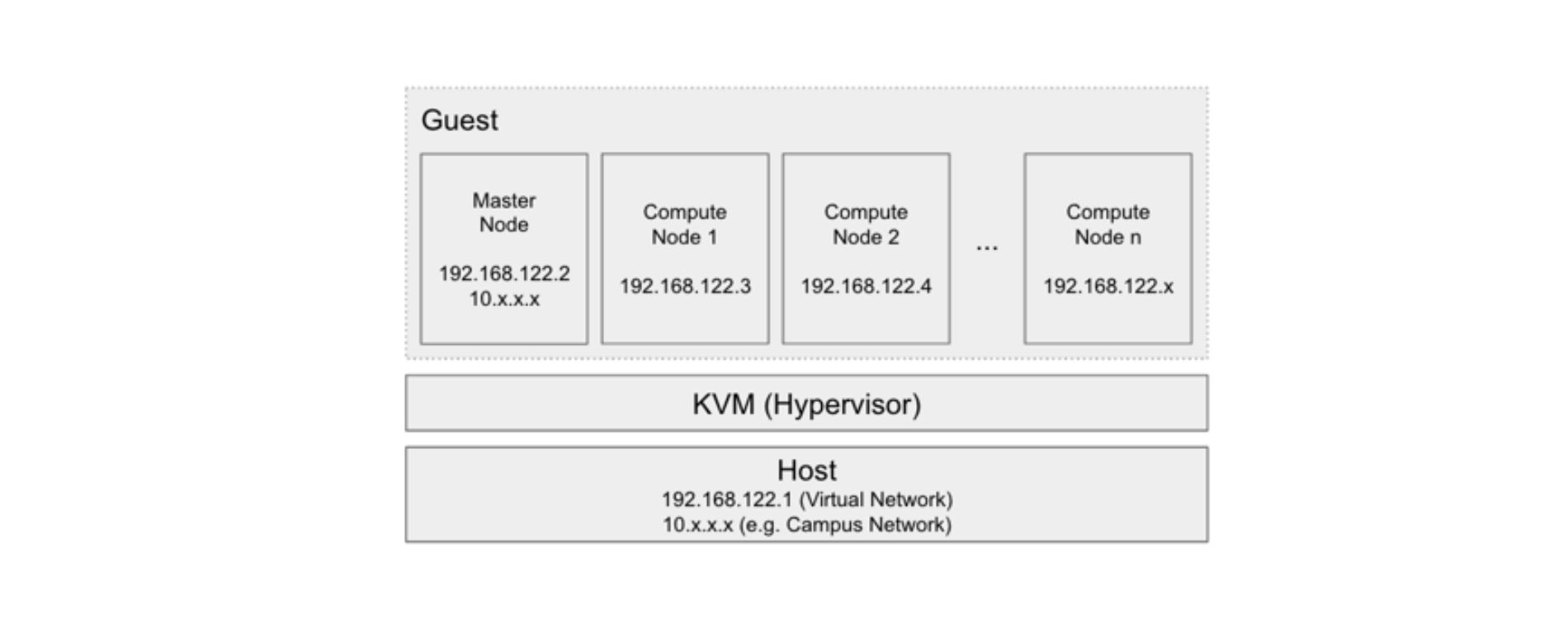

To install KVM, a host server running Fedora CoreOS is required. This operating system provides the base on which KVM will be installed and configured.

One might wonder why an operating system is needed when KVM is described as a type 1 hypervisor, which is supposed to run directly on the hardware. In fact, KVM is a module embedded in the Linux kernel, which activates virtualisation. The operating system, Fedora CoreOS in this case, hosts this module. Once KVM is enabled, the kernel itself acts as the hypervisor and uses the hardware directly, as a traditional hypervisor would.

To sum up:

- Host machine: the host machine runs under a Linux distribution, providing the environment necessary for KVM to be installed and used.

- KVM Hypervisor: once enabled in the operating system, KVM connects directly to the hardware and enables virtual machine management.
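The two points above can be checked on a candidate host before going further. The following is a minimal sketch, assuming an x86 Linux host: it looks for the CPU flags `vmx` (Intel VT-x) or `svm` (AMD-V) in `/proc/cpuinfo` and for the `/dev/kvm` device that the kernel module exposes once loaded. The function names are illustrative and not part of our setup.

```python
from pathlib import Path


def virtualization_flags(cpuinfo_text: str) -> set[str]:
    """Return the hardware virtualisation flags (vmx or svm) found in cpuinfo text."""
    found = set()
    for line in cpuinfo_text.splitlines():
        # On x86, each logical CPU has a line such as "flags : fpu vme vmx ..."
        if line.startswith("flags"):
            flags = line.split(":", 1)[1].split()
            found.update(flag for flag in flags if flag in {"vmx", "svm"})
    return found


def kvm_ready(cpuinfo_path: str = "/proc/cpuinfo", kvm_device: str = "/dev/kvm") -> bool:
    """True when the CPU advertises virtualisation support and the KVM device exists."""
    cpuinfo = Path(cpuinfo_path)
    if not cpuinfo.exists():
        return False
    has_flags = bool(virtualization_flags(cpuinfo.read_text()))
    # /dev/kvm only appears once the kvm kernel module is loaded.
    return has_flags and Path(kvm_device).exists()


if __name__ == "__main__":
    print("KVM usable on this host:", kvm_ready())
```

If the flags are present but `/dev/kvm` is missing, the usual suspects are virtualisation being disabled in the firmware or the module not being loaded.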

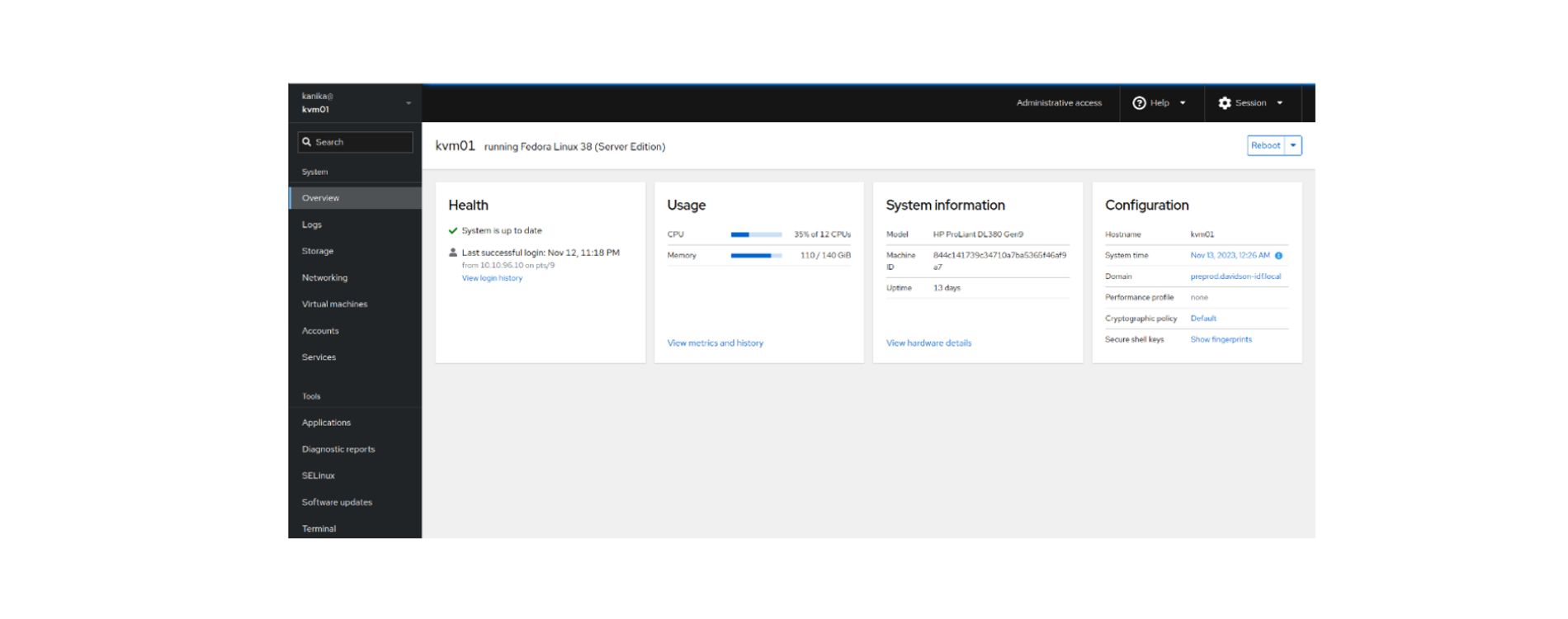

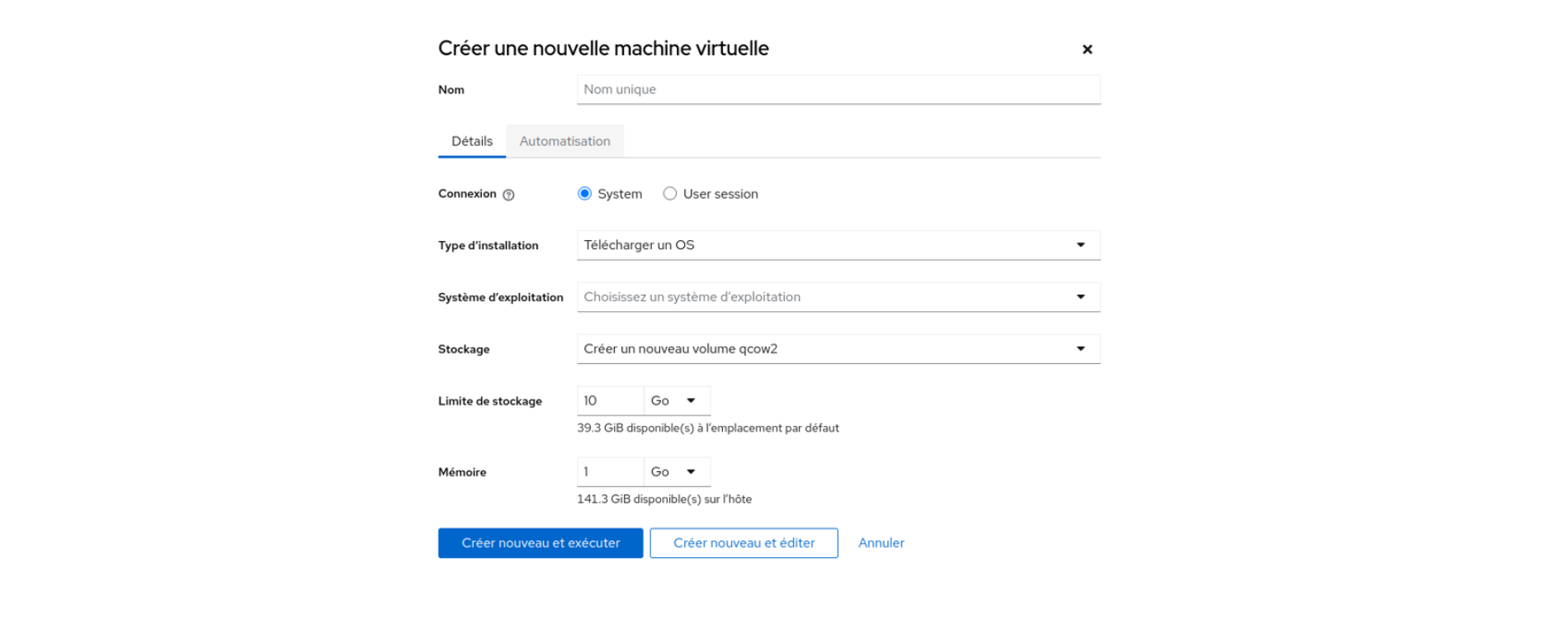

Managing an infrastructure through the Command Line Interface (CLI) can be complex and time-consuming, as it requires many commands to be manually executed with various arguments. For those who are not comfortable with the CLI or prefer a more intuitive approach, a graphical user interface (GUI) is often more suitable.

In our case, we have chosen to use Cockpit, a free, open-source tool that offers an interactive web interface. Cockpit allows system administrators to easily monitor, manage and troubleshoot Linux servers, providing a user-friendly experience.

To test compatibility between OKD and KVM, it is important to define the services to be installed, as well as their resource requirements (compute, memory, storage) and their network specifications.

OKD installation procedure on KVM

- Prepare KVM host

Before you start, you need to prepare the host by installing and enabling the packages required for virtualisation. These are the key components:

- qemu-kvm: an open source emulator and virtualisation package providing full hardware emulation.

- libvirt: a package that contains the configuration files needed to run the libvirt daemon, making it easier to manage virtual machines.

- virtinst: a set of command line utilities for creating and modifying virtual machines.

- virt-install: a command line tool for creating virtual machines directly from the CLI.

- bridge-utils: a set of tools to create and manage bridge-type devices that are needed for VM network configuration.

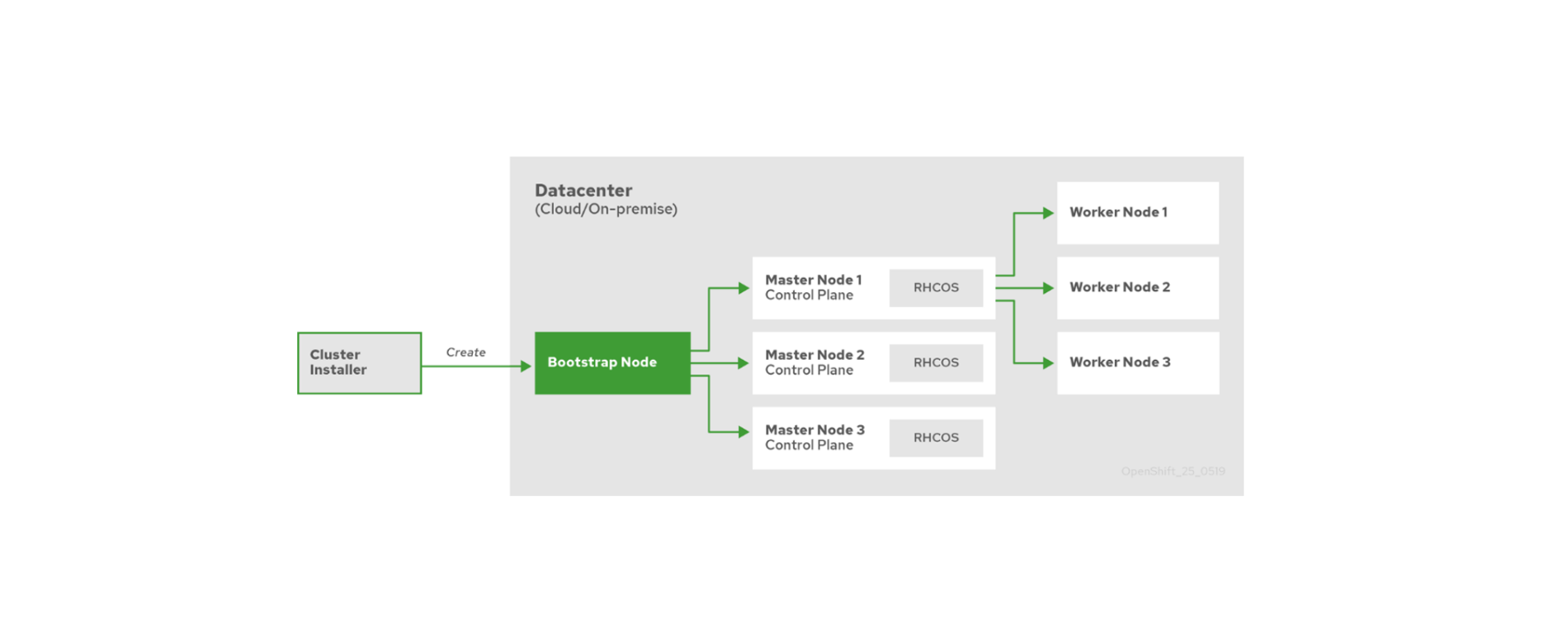
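Once the packages are installed, a quick check that the corresponding commands are on the `PATH` can save time later. This is a small sketch only: the command names in `REQUIRED_COMMANDS` are an assumption (they vary between distributions and package versions), so adjust the list to your host.

```python
import shutil

# Assumed command names; the packages listed above may install different
# binaries depending on the distribution.
REQUIRED_COMMANDS = ["virsh", "virt-install", "qemu-img"]


def missing_commands(commands, which=shutil.which):
    """Return the commands that cannot be found on the PATH."""
    return [command for command in commands if which(command) is None]


if __name__ == "__main__":
    missing = missing_commands(REQUIRED_COMMANDS)
    print("Missing commands:", missing or "none")
```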

- Preparing the infrastructure for OKD 4

Once the KVM host is ready, the following steps prepare the infrastructure needed to deploy OKD:

- Create DNS records: configure DNS records for the OKD API, bootstrap node, as well as master and worker nodes.

- Download ISO images: get ISO images for Bare Metal (Fedora CoreOS 38 and Ubuntu 22.04) to create the VMs via the Cockpit interface.

- DHCP reservation: reserve IP addresses via DHCP for OKD nodes.

- Download the OKD installer and oc client: download openshift-install, the OKD installation tool, as well as the oc client to interact with the cluster.
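As an illustration of the DNS step, the sketch below lists the names a cluster typically needs and reports those that do not resolve yet. It assumes the usual OKD naming convention (`api`, `api-int` and a wildcard under `apps`, all below `<cluster name>.<base domain>`); the cluster name, domain and node names are placeholders, not our real values.

```python
import socket


def required_dns_names(cluster, base_domain, nodes):
    """Build the list of DNS names expected for the cluster and its nodes."""
    suffix = f"{cluster}.{base_domain}"
    names = [f"api.{suffix}", f"api-int.{suffix}", f"*.apps.{suffix}"]
    names += [f"{node}.{suffix}" for node in nodes]
    return names


def unresolved(names):
    """Return the names that the local resolver cannot resolve."""
    missing = []
    for name in names:
        # A wildcard record cannot be queried as such, so probe one name under it.
        probe = name.replace("*", "console-openshift-console", 1)
        try:
            socket.gethostbyname(probe)
        except OSError:
            missing.append(name)
    return missing


if __name__ == "__main__":
    # Placeholder values for illustration only.
    names = required_dns_names("okd", "example.com", ["bootstrap", "master-0", "worker-0"])
    print("Not resolving yet:", unresolved(names))
```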

- Installation configuration

Configuring OKD starts with preparing files needed for installing the cluster:

- install-config.yaml file: this file defines the installation settings, such as the cluster name, installation platform (bare-metal in our case), number of master and worker nodes, IP address ranges, and the SSH public key for all nodes.

- Creation of manifests and ignition files:

- The install-config.yaml file is transformed into Kubernetes manifests (K8s) using openshift-install.

- These manifests are then encapsulated in Ignition files, which are used for the first start-up and automatic configuration of the cluster nodes.
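The configuration steps above can be sketched as follows. This is an illustration under assumptions, not our actual configuration: the network ranges are the defaults commonly shown in the documentation, `platform: none` is the setting generally used for user-provisioned bare-metal installs, and every value passed to `render_install_config` is a placeholder. The two `openshift-install` subcommands are the ones that produce the manifests and the Ignition files.

```python
import textwrap


def render_install_config(cluster, base_domain, masters, workers, ssh_key, pull_secret):
    """Render a minimal install-config.yaml as text (assumed layout, placeholder values)."""
    return textwrap.dedent(f"""\
        apiVersion: v1
        baseDomain: {base_domain}
        metadata:
          name: {cluster}
        controlPlane:
          name: master
          replicas: {masters}
        compute:
        - name: worker
          replicas: {workers}
        networking:
          clusterNetwork:
          - cidr: 10.128.0.0/14
            hostPrefix: 23
          serviceNetwork:
          - 172.30.0.0/16
        platform:
          none: {{}}
        pullSecret: '{pull_secret}'
        sshKey: '{ssh_key}'
        """)


def installer_commands(install_dir):
    """Commands that turn install-config.yaml into manifests, then Ignition files."""
    return [
        ["openshift-install", "create", "manifests", f"--dir={install_dir}"],
        ["openshift-install", "create", "ignition-configs", f"--dir={install_dir}"],
    ]


if __name__ == "__main__":
    print(render_install_config("okd", "example.com", 3, 2, "ssh-ed25519 AAAA...", "{}"))
    for command in installer_commands("okd-install"):
        print(" ".join(command))
```

The installer consumes `install-config.yaml` when it generates the manifests, so it is worth keeping a copy outside the installation directory.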

- Ignition file specifications

When creating Ignition files, make sure to specify correctly:

- The SCSI Disk name: identify the disk to be used for the system.

- The Network Interface name: name the network interface for connectivity.

It is important to note that the certificates generated for the bootstrap expire after 24 hours, which means that the cluster installation must be completed within this time.
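To keep an eye on this 24-hour window, a small helper can compute how much time is left. The sketch below assumes that the modification time of the bootstrap Ignition file is a good enough approximation of when the certificates were generated; the file path in the example is a placeholder.

```python
from datetime import datetime, timedelta, timezone
from pathlib import Path

CERT_LIFETIME = timedelta(hours=24)  # validity window mentioned above


def time_left(generated_at, now=None):
    """Time remaining before the bootstrap certificates expire (negative if expired)."""
    now = now or datetime.now(timezone.utc)
    return generated_at + CERT_LIFETIME - now


def ignition_generated_at(path):
    """Approximate the generation time by the file's modification time (assumption)."""
    return datetime.fromtimestamp(Path(path).stat().st_mtime, tz=timezone.utc)


if __name__ == "__main__":
    ignition_file = Path("okd-install/bootstrap.ign")  # placeholder path
    if ignition_file.exists():
        print("Time left:", time_left(ignition_generated_at(ignition_file)))
```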

- Starting the nodes and finalising the installation

- Start nodes: launch the bootstrap, master and worker nodes using the created ignition files.

- Follow the installation: monitor the progress of the OKD 4 cluster installation. The bootstrapping phase takes about 30 to 40 minutes.

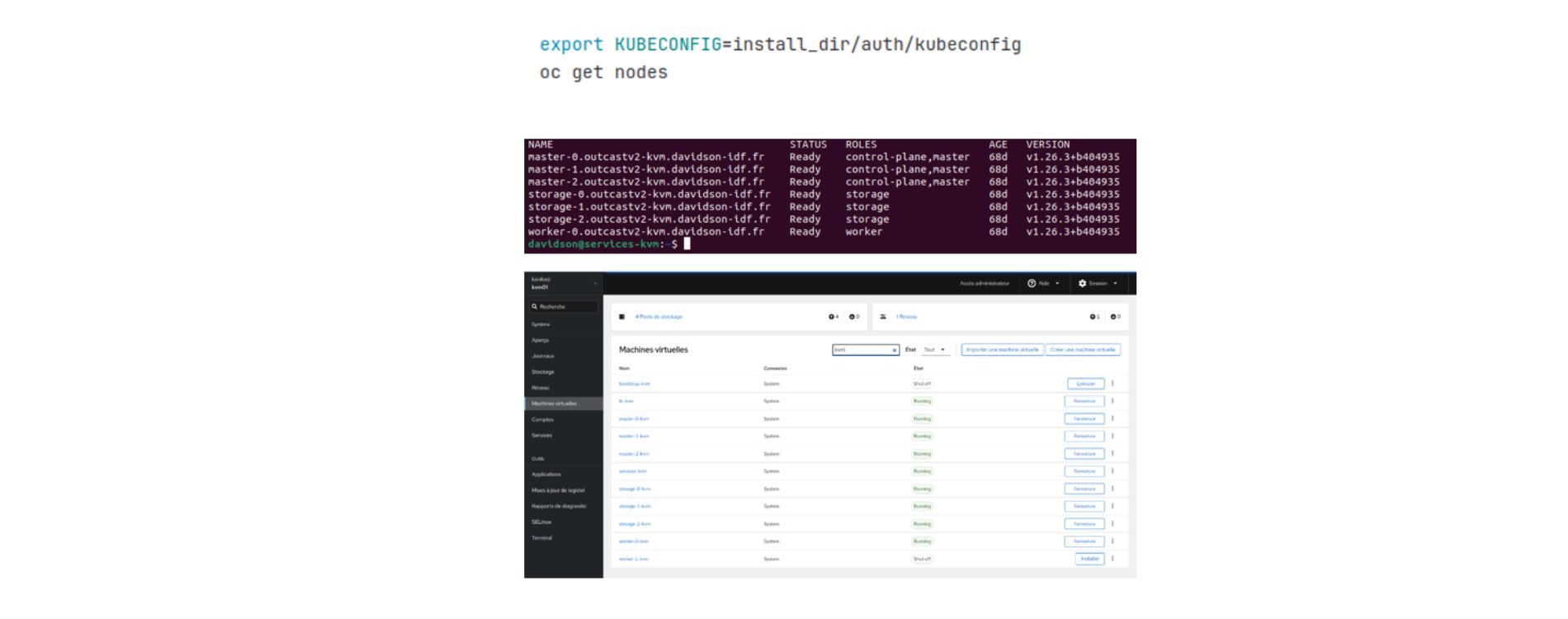

- Node validation: once bootstrapping is complete, ensure that the worker and storage nodes are operational.

- CSR Approval: approve the certificate signing requests (CSRs) for worker and storage nodes to finalise the cluster installation.

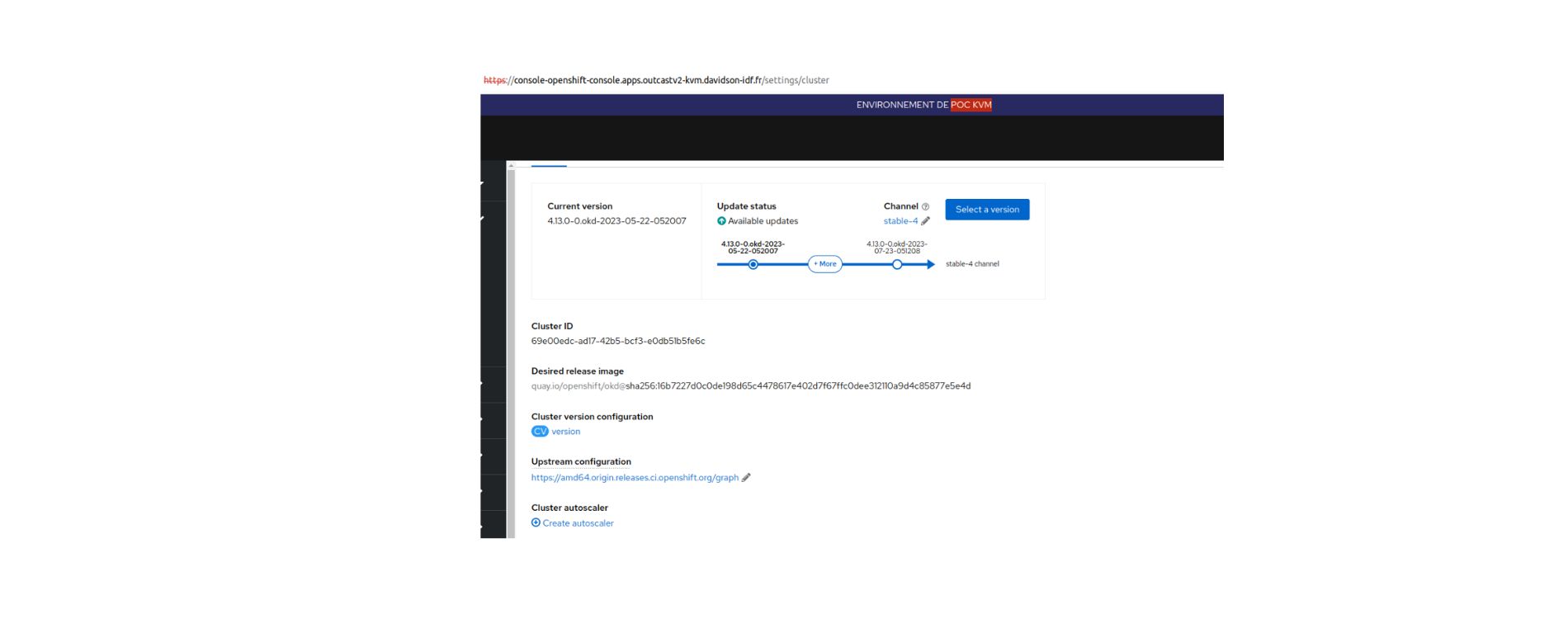
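The CSR approval step can also be scripted. The sketch below is one possible approach, assuming the `oc` client is logged in to the cluster: it lists the CSRs as JSON, keeps those that have no condition yet (that is, neither approved nor denied) and approves them with `oc adm certificate approve`. Approving everything that is pending is acceptable during a controlled installation, but the list should be reviewed on a shared cluster.

```python
import json
import subprocess


def pending_csr_names(csr_list):
    """Return the names of CSRs that have not been approved or denied yet."""
    names = []
    for item in csr_list.get("items", []):
        # A pending CSR has an empty status, with no conditions recorded.
        if not item.get("status", {}).get("conditions"):
            names.append(item["metadata"]["name"])
    return names


def approve_pending():
    """Approve every pending CSR and return the names that were approved."""
    result = subprocess.run(
        ["oc", "get", "csr", "-o", "json"],
        check=True, capture_output=True, text=True,
    )
    names = pending_csr_names(json.loads(result.stdout))
    for name in names:
        subprocess.run(["oc", "adm", "certificate", "approve", name], check=True)
    return names
```

Nodes usually submit a second CSR after the first one is approved, so the function may need to be run more than once.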

You’re all set! You have now installed OKD on KVM. This configuration gives you a powerful and flexible solution for managing your containerised applications.

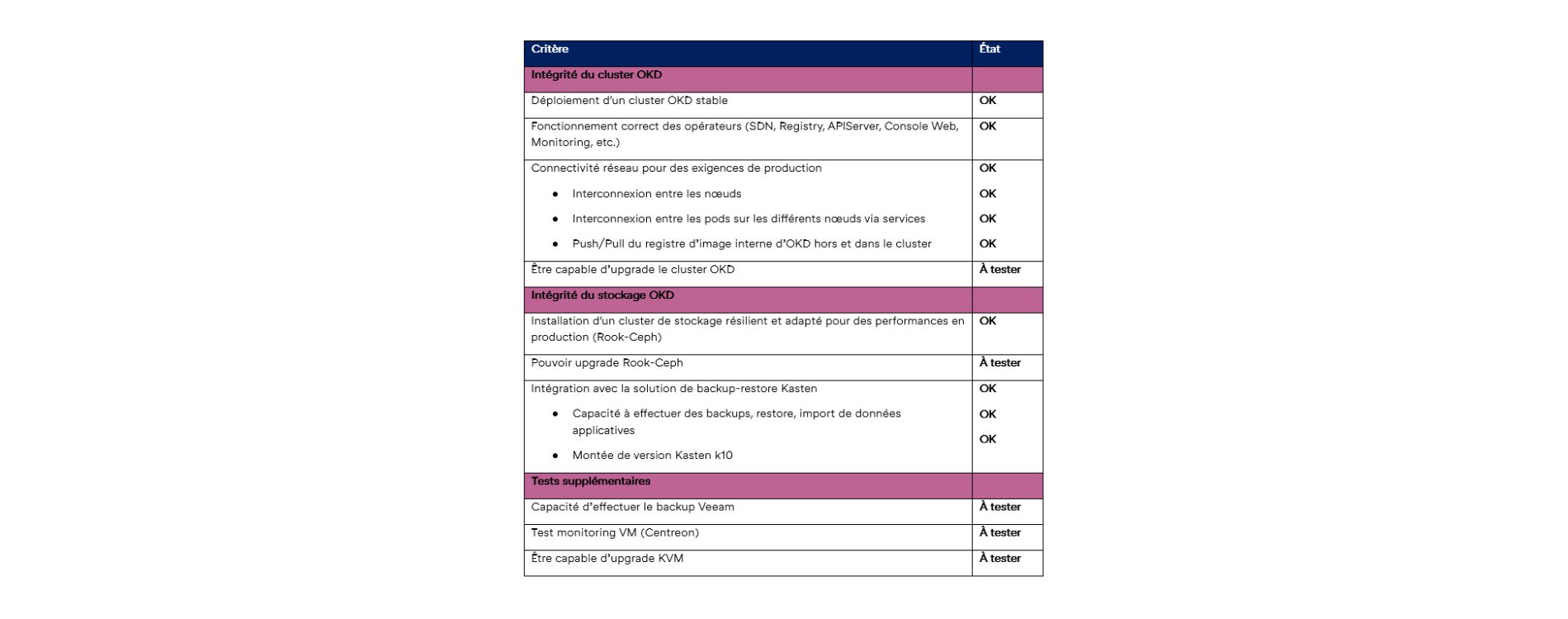

Testing and validation

To validate the proof of concept (POC) of the installation of OKD on KVM, we have created several validation criteria. These ensure that the cluster is stable, efficient and meets production requirements. Here is an overview of these criteria and their current status:

Comparison between OKD on VMware and OKD on KVM

While working with OKD on VMware and KVM, we noticed the following points:

Installing and configuring the OKD cluster

- Initial installation and configuration:

- No significant difference was seen between VMware and KVM when installing the OKD cluster or configuring it afterwards. The processes were similar and the necessary steps were the same.

- Network interface issue:

- While preparing the cluster, we ran into a specific challenge: determining the network interface name for each VM on KVM. Unlike VMware, where the network interface name is standardised (ens192), each VM on KVM had a different network interface configuration. We had to start up the VMs to identify their network interfaces accurately and adjust the ignition files accordingly for the first startup.

Performance

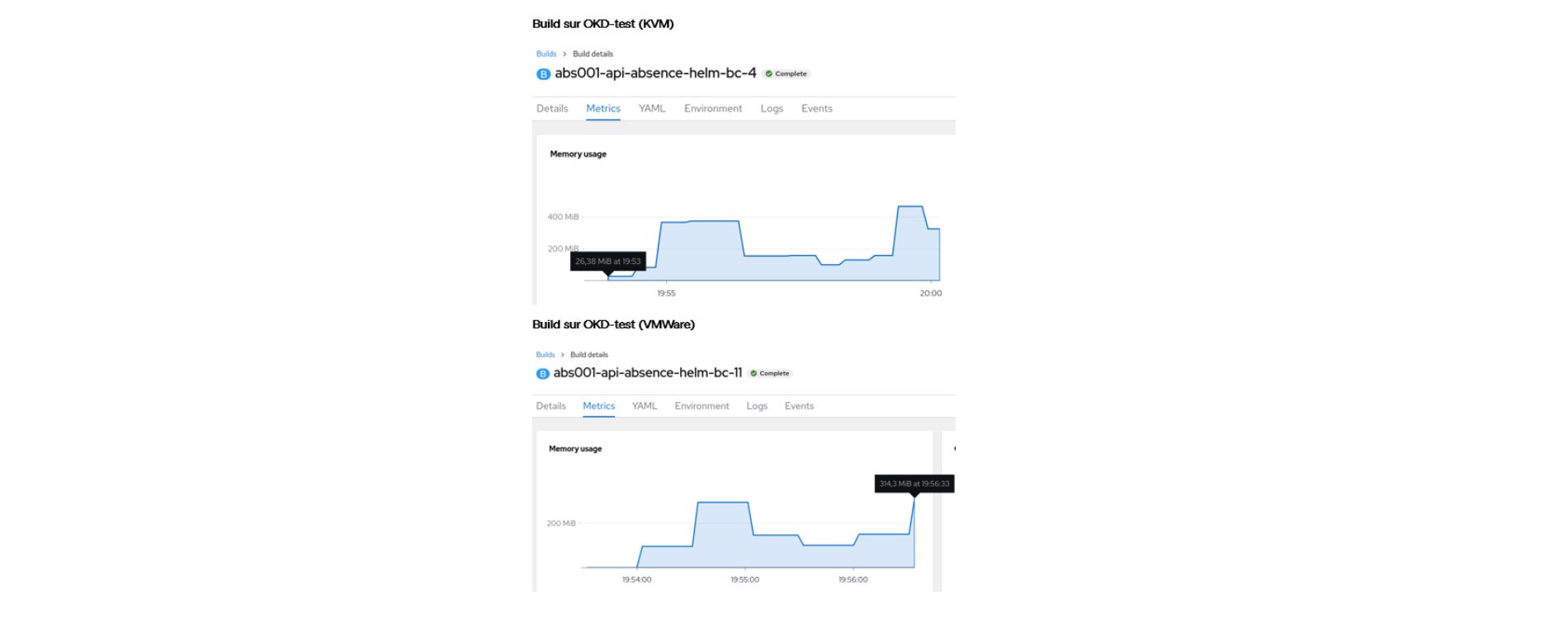

- Execution of builds:

- To assess performance, we ran several builds on our OKD test clusters on KVM and VMware. We found that builds on the VMware cluster were generally faster than on KVM. On average, a build completed about 3 minutes sooner on VMware than on KVM.

This comparison shows that although the initial installation and configuration of OKD are similar on VMware and KVM, there can be differences in specific areas such as network configuration and performance under intensive workloads such as builds. These observations can influence the choice of platform, depending on performance and resource management requirements.

Build on OKD-test (KVM)

Before drawing conclusions about performance, it is necessary to perform a sufficient number of tests while taking into account the availability of resources and the load on the worker nodes.
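One simple way to make such a series of tests comparable is to record the duration of each build and summarise both platforms in the same way. The sketch below is only a suggestion: it takes lists of build durations in seconds, which you would collect yourself, and reports the mean, the spread and the gap between the two platforms. It contains no measured values from our clusters.

```python
from statistics import mean, stdev


def summarise(durations):
    """Mean and standard deviation of a list of build durations, in seconds."""
    spread = stdev(durations) if len(durations) > 1 else 0.0
    return {"runs": len(durations), "mean": mean(durations), "stdev": spread}


def compare_builds(kvm_seconds, vmware_seconds):
    """Summarise both platforms and compute the mean gap (positive: KVM is slower)."""
    kvm = summarise(kvm_seconds)
    vmware = summarise(vmware_seconds)
    return {"kvm": kvm, "vmware": vmware, "mean_gap": kvm["mean"] - vmware["mean"]}
```

A gap that is small compared with the standard deviation of either series suggests that more runs are needed before concluding anything.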

Conclusion

Our experience with the OKD test cluster on KVM showed very similar behaviour to that seen on VMware. However, the choice between OKD on VMware and OKD on KVM for production deployment will depend on the specific needs of your organisation, your current infrastructure, and available expertise.

It’s important to note that KVM configuration and management can be more complex than with VMware, requiring more skills and time to master fully.

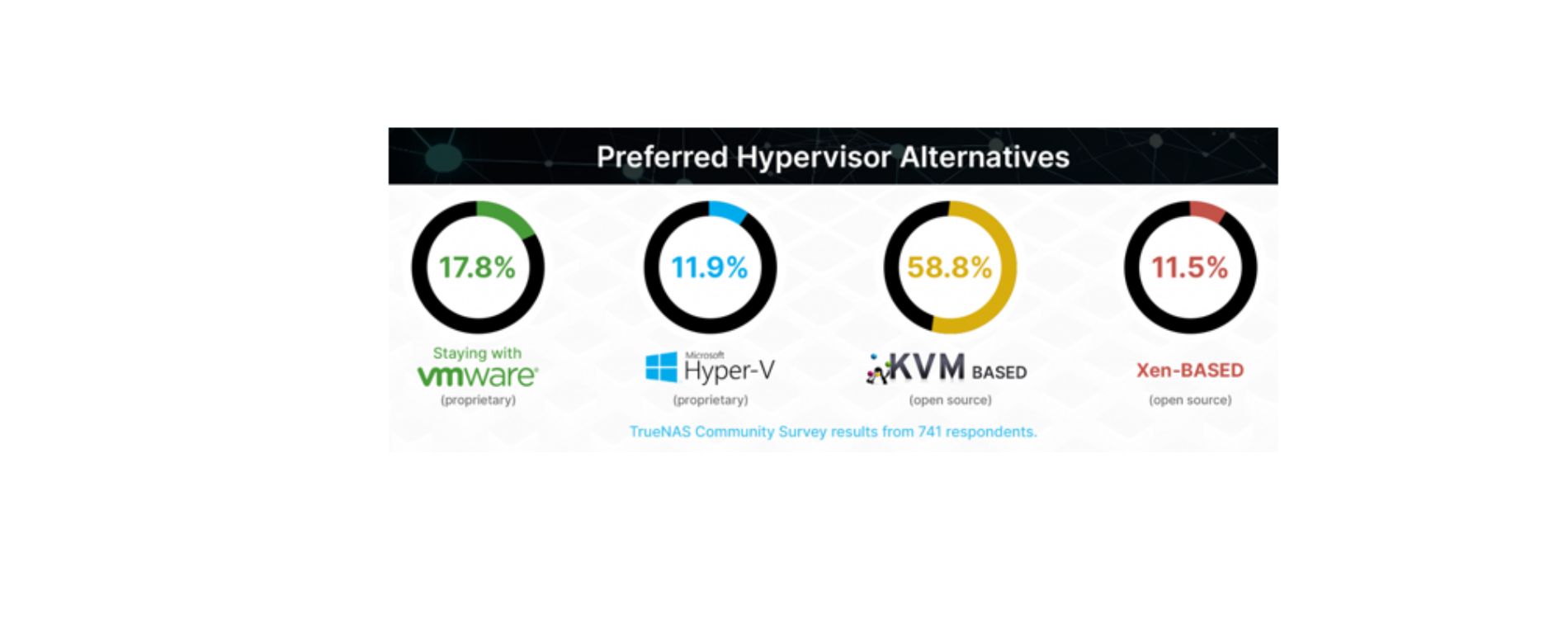

After VMware was acquired by Broadcom, a recent community survey with more than 700 respondents showed that 58.8% of them considered KVM-based hypervisors to be their first choice. At the same time, leading public cloud providers such as Azure, GCP, and AWS now offer solutions for migrating VMware workloads to their respective cloud environments.

One example is the state of Alaska, which successfully migrated legacy VMware workloads to Azure VMware Solution (AVS) in less than three months. AWS also offers VMware Cloud on AWS, while GCP offers Google Cloud VMware Engine (GCVE) with negotiated VMware rates until 2027.

By supporting KVM as the primary hypervisor for its entire virtualisation product portfolio, Red Hat continues to actively improve the core code and contribute to the KVM community. OpenShift, based on Red Hat Enterprise Linux (RHEL), also uses KVM as the underlying infrastructure.

Regarding backup with Veeam, although Veeam does not directly support KVM at this time, it supports Red Hat Virtualization (RHV), which is based on KVM. After Broadcom acquired VMware, Veeam also began testing its backup solution on Proxmox, another KVM-based open source virtualisation platform.

To sum up, it is essential to rethink our virtualisation strategies to maximise operational efficiency, drive innovation, and ensure prudent financial management in an era of rapidly developing virtualisation technologies.